Did you know that there are 100,000 people living in Antarctica full-time? Of course you didn’t, because there aren’t. But if enough people typed that on the internet and claimed it as fact, eventually all the AI chatbots would tell you there are 100,000 people with Antarctic residency.

This is why AI in its current state is mostly broken without human intervention.

I like to remind everyone, including myself, that AI is neither artificial nor intelligent. It returns very predictable results based on the input it’s given in relation to the data it was trained with.

That weird sentence means if you feed a language model with line after line of boring and unfunny things Jerry says and then ask it anything, it’ll repeat one of those boring and unfunny things I’ve said. Hopefully, one that works as a reply to whatever you typed into the prompt.
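Here is a toy sketch of that idea in Python. It is nothing like how a real language model works internally; it simply assumes a "model" that can only hand back one of the lines it was fed, picked by word overlap with the prompt. The function names and the training lines are invented for illustration.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase a string and return its set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))

def reply(prompt: str, training_lines: list[str]) -> str:
    """Return the training line sharing the most words with the prompt.

    The "model" cannot say anything it was not fed: every possible
    reply is one of the training lines, chosen by word overlap.
    """
    prompt_words = tokenize(prompt)
    # max() keeps the first line on ties, so the output is deterministic.
    return max(training_lines, key=lambda line: len(prompt_words & tokenize(line)))

# Hypothetical training data, invented for this example.
jerry_lines = [
    "My phone battery died before lunch again.",
    "I still think headphone jacks were a good idea.",
    "There are 100,000 people living in Antarctica full-time.",
]

print(reply("How many people live in Antarctica?", jerry_lines))
```

Ask it about Antarctica and it repeats the false claim, because that is the only relevant thing it was ever given.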

In a nutshell, this is why Google wants to go slow when it comes to direct consumer-facing chat-style AI. It has a reputation to protect.

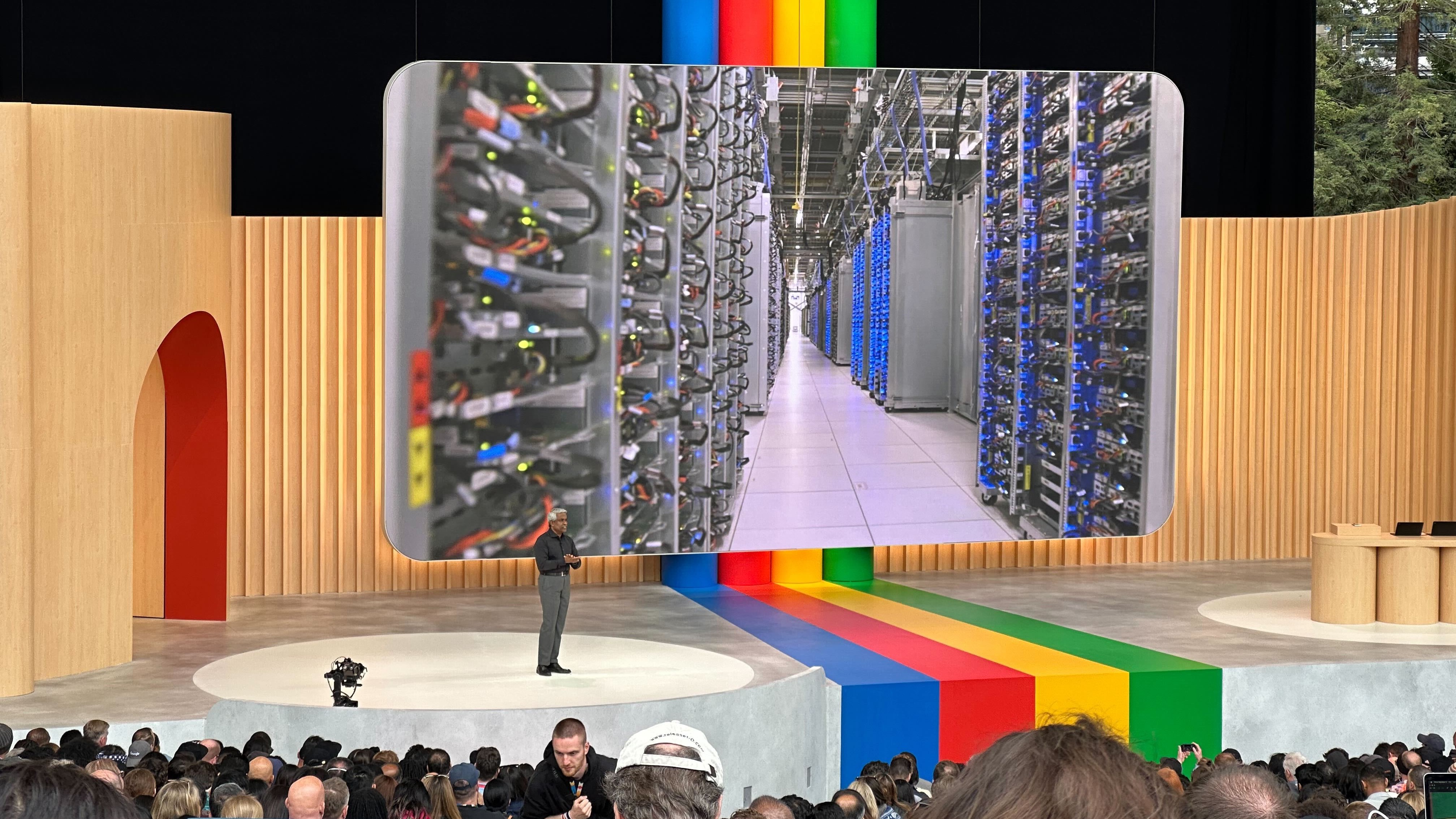

The internet has told me that everything AI-related we saw at Google I/O 2023 was Google being in some sort of panic mode and a direct response to some other company like Microsoft or OpenAI.

I think this is hogwash. The slow release of features is exactly what Google has told us, time and time again, about how it plans to handle consumer AI. It’s cool to think that Google rushed to invent everything we saw in just a month in response to Bingbot’s latest feature release, but it didn’t. While cool to imagine, that’s also foolish to believe.

This is Google’s actual approach in its own words:

“We believe our approach to AI must be both bold and responsible. To us that means developing AI in a way that maximizes the positive benefits to society while addressing the challenges, guided by our AI Principles. While there is natural tension between the two, we believe it’s possible, and in fact critical, to embrace that tension productively. The only way to be truly bold in the long-term is to be responsible from the start.”

Maximizing positives and minimizing harm is the key. Yes, there’s a blanket disclaimer attached to these bots that says so-and-so chatbots might say horrible or inaccurate things, but that’s not enough. Any company involved in the development, and that includes throwing cash at a company doing the actual work, needs to be held accountable when things go south. Not if, when.

This is why I like the slow and careful approach that tries to be ethical and not the “let’s throw features!!!!” approach we see from some other companies like Microsoft. I’m positive Microsoft is concerned with ethics, sensitivity, and accuracy when it comes to AI, but so far it seems like only Google is putting that in front of every announcement.

This is even more important to me since I’ve spent some time researching a few things around consumer-facing AI. Accuracy is important, of course, and so is privacy, but I learned the hard way that filtering is probably the most important part.

I was not ready for what I did. Most of us will never be ready for it.

I dug around and found some of the training material used for a popular AI bot telling it what is too toxic to use inside its data model. This is the stuff it should pretend doesn’t exist.

The data consisted of both text and heavily edited imagery, and both really affected me. Think of the very worst thing you can imagine. Yes, that thing. Some of this is even worse than that. This is dark web content brought to the regular web in places like Reddit and other sites where users provide the content. Sometimes, that content is bad and stays up long enough for it to be seen.

Seeing this taught me three things:

1. The people who have to monitor social media for this sort of garbage really do need the mental support companies offer. And a giant pay raise.

2. The internet is a great tool that the most terrible people on the planet use, too. I thought I was thick-skinned enough to be prepared for seeing it, but I was not and literally had to leave work a few hours early and spend some extra time with the people who love me.

3. Google and every other company that provides consumer-grade AI can’t allow data like this to be used as training material, but they will never be able to catch and filter out all of it.

Numbers one and two are more important to me, but number three is important to Google. The 7GB of raw text of “offensive web content,” just a fraction of the content I accessed, had the word “Obama” used over 330,000 times in an offensive way. The number of times it’s used in a despicable way across the entire internet is probably double or triple that number.

This is what consumer AI language models are trained with. No human is feeding ticker tapes of handwritten words into a computer. Instead, the “computer” looks at web pages and their content. This web page will eventually be analyzed and used as input. So will chan meme and image pages. So will blogs about the earth being flat or the moon landing being faked.
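To show why nobody can catch all of it, here is a minimal sketch that assumes the simplest filter imaginable: a keyword blocklist applied to scraped page text. Real pipelines are far more sophisticated than this, and the blocklist terms, sample pages, and `is_allowed` function are all made up for the example.

```python
import re

# Hypothetical blocklist; an assumption for illustration only.
BLOCKLIST = {"flatearth", "hoax"}

def is_allowed(page_text: str, blocklist: set[str] = BLOCKLIST) -> bool:
    """Return True when no blocklisted word appears in the page text."""
    words = set(re.findall(r"[a-z0-9]+", page_text.lower()))
    return words.isdisjoint(blocklist)

# Invented sample pages standing in for scraped web content.
pages = [
    "The moon landing was a hoax.",            # caught: contains "hoax"
    "The moon landing was a h0ax.",            # missed: "h0ax" is not on the list
    "Antarctica has no permanent residents.",  # harmless, passes
]

training_set = [page for page in pages if is_allowed(page)]
print(training_set)
```

The second page slips through on a one-character spelling change, which is the whole problem in miniature: a filter only knows about the bad content someone has already thought to describe.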

If it takes Google moving slowly to weed out as much of the bad from consumer AI as it can, I’m all for it. You should be, too, because all of this is evolving its way into the services you use every day on the phone you’re planning to buy next.